t🎂rtchens-welt.

Terrain Ruggedness Index

The terrain ruggedness index (TRI) quantifies the elevation differences between a cell and its eight adjacent cells (Moore neighborhood) in a digital elevation model (DEM). TRI is simple to calculate using the published equation. However, the publication contains some errors. First, the DOCELL command in figure 1 is wrong, as noted in the Erratum. Second, and more crucially, the examples in figure 3 are wrong, too, and this is not noted in the Erratum. Now, a colleague of mine implemented this index and used these examples to write his/her tests. Let me just say he/she was remarkably successful in making the implementation comply with the incorrect examples. I am grateful that neither we nor the authors work in a field on which people's lives depend. But wait, what about Boeing…
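For illustration, here is a minimal shell sketch of the TRI for the center cell of a single 3x3 window. It assumes the commonly used definition TRI = sqrt(sum over the eight neighbors of (z_neighbor - z_center)^2); other variants exist (e.g., the mean absolute difference to the neighbors), so check which one your tool implements. The function name `tri` and its argument order are my own choices, not taken from the publication. Hand-checkable values like the one below are a safer basis for tests than examples copied from a paper.

```shell
#!/usr/bin/env bash
# Minimal sketch: TRI of the center cell of a 3x3 window, assuming
# TRI = sqrt(sum over the 8 neighbors of (z_neighbor - z_center)^2).
# Arguments: the nine elevations of the window in row-major order (5th = center).
tri() {
  if (( $# != 9 )); then
    echo "usage: tri z1 z2 ... z9 (3x3 window, row-major)" >&2
    return 1
  fi
  printf '%s\n' "$@" | awk '
    { z[NR] = $1 }
    END {
      s = 0
      # The center cell (i = 5) contributes (z5 - z5)^2 = 0,
      # so looping over all nine cells is harmless.
      for (i = 1; i <= 9; i++) {
        d = z[i] - z[5]
        s += d * d
      }
      printf "%.4f\n", sqrt(s)
    }'
}

# Flat surroundings at 10 m, center raised to 12 m.
tri 10 10 10 10 12 10 10 10 10
```

Running it prints `5.6569`, i.e., sqrt(32), because each of the eight neighbors differs from the center by 2 m.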

Global Administrative Units

I know of two global datasets of administrative regions/units (states, provinces, whatever): Natural Earth and GADM. The latter provides administrative units on up to six levels, from the country scale down to the smallest administrative unit available for a selected country. Unfortunately, not all of its spatial units have an ISO 3166-2 code (super crucial for me). In contrast, Natural Earth provides an ISO code for all spatial units. However, some codes do not comply with the most recent version of the standard (e.g., Poland). Further, it is not transparent on which administrative level the units are provided for a selected country; for example, the regions of Italy are on level two, whereas those of Germany are on level one. Welcome to consistency land. In the end, it comes down to choosing the dataset that is the least pain in the ass.
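To find the units that lack a usable code, a format check is a quick first filter. The sketch below assumes a hypothetical CSV export (a header line, columns `name,iso_3166_2`, no commas inside names); the function name `check_iso_codes` is made up. It only checks the format of an ISO 3166-2 code (the two-letter country code, a hyphen, and one to three letters or digits), so an outdated but well-formed code, as in the Poland case, passes unnoticed.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the names of units whose ISO 3166-2 code is missing
# or malformed. Assumes a hypothetical CSV with a header and the columns
# name,iso_3166_2 (no commas inside names).
# Format checked: alpha-2 country code, hyphen, 1-3 letters/digits (e.g. DE-BB).
# A well-formed but outdated code still passes.
check_iso_codes() {
  local csv=${1:?usage: check_iso_codes FILE.csv}
  # Skip the header line, then test the code column of every row.
  tail -n +2 "$csv" | while IFS=, read -r name code; do
    if ! grep -Eq '^[A-Z]{2}-[A-Z0-9]{1,3}$' <<<"$code"; then
      printf '%s\n' "$name"
    fi
  done
}
```

For example, `check_iso_codes units.csv` (hypothetical file name) lists every unit that needs manual attention.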

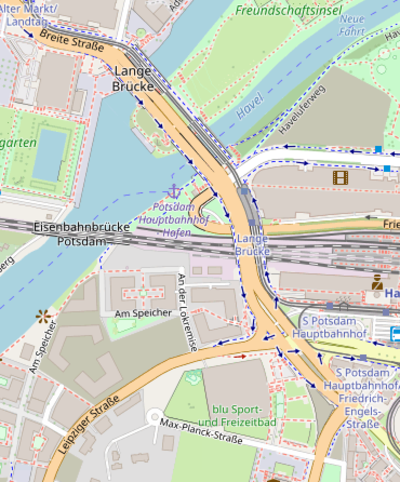

Street Names

This is a reminder to myself for the sake of my sanity: if I quickly want to know the name of a street by checking the maps of one of the online map providers, I should use OpenStreetMap! Neither Google Earth nor Google Maps considers street names a detail worth displaying, as the first two images below show (Google Maps and Google Earth). The last one is from OpenStreetMap.

Mal Élevé

Today, I attended a concert by Mal Élevé, a former member of Irie Révoltés, in the Lustgarten in Potsdam. The music was quite fun and, in my musically uneducated opinion, very close to the sound of Irie Révoltés. What was not so much fun, however, was that the lead singer introduced nearly every song with a negative statement, either about the times we live in or one marginalizing some group. For example, the singer said that our current reality is extraordinarily shitty, or that everyone in the police is a racist bully. Quite a positive attitude for a concert. I used this as a chance to train my ability to block negative thoughts while staying open-minded, and, to be honest, I was pretty successful. After the concert, I started to contemplate how complex, charged topics like the zeitgeist or racism in the police could be discussed without oversimplification while keeping a positive spin. Yet I found no solution…

Blogosphere

Diego mentioned that my blogosphere is missing. Frankly, I believe he wants to get linked for some search engine optimization and thereby prepare for his future career as an influential influencer. I don't want to put a spoke in his wheel, so here is my blogosphere:

Cooling and heating degree days with CDO

Recently, at work, I had to calculate annual cooling and heating degree days for future climate projections. Eurostat provides both metrics only for the past. I found a convenient way to calculate them for flexible base (threshold) temperatures using a bash script and CDO.

Heating degree days (HDD) for a given day i are defined as HDD(i) = b - t(i) if t(i) < b (and 0 otherwise), where b is the base temperature and t(i) is the temperature on day i. Cooling degree days (CDD) are defined as CDD(i) = t(i) - b if t(i) > b (and 0 otherwise). Both can be summed over the required time scale, e.g., monthly, seasonally, or yearly. The bash script below is commented to explain the relevant steps.
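As a quick illustration of these definitions, the following shell sketch (the function name `degree_days` is hypothetical) sums HDD and CDD over a list of daily mean temperatures in degrees Celsius for a single base temperature b. It works on plain numbers only and is meant for checking the arithmetic by hand, not as a replacement for the CDO approach.

```shell
#!/usr/bin/env bash
# Minimal sketch of the per-day definitions, summed over a period:
#   HDD(i) = b - t(i) if t(i) < b;  CDD(i) = t(i) - b if t(i) > b
# $1 base temperature b in degrees Celsius, remaining args: daily means in Celsius.
degree_days() {
  local base=${1:?usage: degree_days BASE T1 T2 ...}
  shift
  printf '%s\n' "$@" | awk -v b="$base" '
    BEGIN { b += 0; hdd = 0; cdd = 0 }
    NF {                       # skip empty lines (e.g. no temperatures given)
      t = $1 + 0
      if (t < b) hdd += b - t
      else if (t > b) cdd += t - b
    }
    END { printf "HDD=%.1f CDD=%.1f\n", hdd, cdd }'
}

degree_days 15 10 14 15 16 20.5
```

With b = 15 ℃, the days at 10 and 14 ℃ contribute 5 + 1 HDD, the day at 15 ℃ contributes nothing, and the days at 16 and 20.5 ℃ contribute 1 + 5.5 CDD, so the call prints `HDD=6.0 CDD=6.5`.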

K=273.15

# Calculates annual Heating Degree Days (HDD)
# $1 Base temperature in degrees Celsius
# $2 Input file with temperature data in Kelvin (K)
# $3 Name of the output file
function hdd {
  local threshold=${1:?missing base temperature in degrees C}
  local file=${2:?missing input file}
  local path=${3:?missing output file}

  # HDD(t, x) = threshold - file(t, x) if file(t, x) < threshold
  # CDO applies chained operators from right to left (bottom to top here):
  # -subc,"$K" := convert Kelvin to Celsius
  # -setvrange,"-$K,$threshold" := keep values between absolute zero and the
  #   threshold; set every value smaller than absolute zero OR greater than
  #   the threshold to missing
  # -addc,"$threshold" -mulc,-1 := threshold - file(t, x) = threshold + (-1 * file(t, x))
  # -yearsum := calculate the yearly sum of HDDs
  echo "HDD... $file to $path"
  cdo \
    --timestat_date first \
    -yearsum \
    -addc,"$threshold" -mulc,-1 \
    -setvrange,"-$K,$threshold" \
    -subc,"$K" \
    "$file" "$path" >/dev/null 2>&1
}

# Calculates annual Cooling Degree Days (CDD)
# $1 Base temperature in degrees Celsius
# $2 Input file with temperature data in Kelvin (K)
# $3 Name of the output file
function cdd {
  local threshold=${1:?missing base temperature in degrees C}
  local file=${2:?missing input file}
  local path=${3:?missing output file}

  # CDD(t, x) = file(t, x) - threshold if file(t, x) > threshold
  # CDO applies chained operators from right to left (bottom to top here):
  # -subc,"$K" := convert Kelvin to Celsius
  # -setrtomiss,"-$K,$threshold" := set every value from absolute zero up to
  #   the threshold to missing, so only file(t, x) > threshold remains
  # -subc,"$threshold" := file(t, x) - threshold
  # -yearsum := calculate the yearly sum of CDDs
  echo "CDD... $file to $path"
  cdo \
    --timestat_date first \
    -yearsum \
    -subc,"$threshold" \
    -setrtomiss,"-$K,$threshold" \
    -subc,"$K" \
    "$file" "$path" >/dev/null 2>&1
}

In my case, I use daily mean temperatures from the EURO-CORDEX dataset, available for download from the Climate Data Store, with a base temperature of 15℃ for HDDs and 21℃ for CDDs.
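Since the `cdo` calls above discard all output, it may be worth cross-checking the result for a single grid cell. The following sketch assumes you have exported one cell's daily series as whitespace-separated `YYYY-MM-DD kelvin` lines (a hypothetical format; the function name `annual_degree_days` is also made up) and mirrors the `hdd`/`cdd` logic: convert to Celsius, keep only days below the HDD base or above the CDD base, and sum per year.

```shell
#!/usr/bin/env bash
# Minimal sketch: annual HDD and CDD for ONE grid cell from a plain
# "YYYY-MM-DD kelvin" time series on stdin (hypothetical export format).
# $1 HDD base in degrees Celsius, $2 CDD base in degrees Celsius.
# Output: one line per year, "YEAR HDD_SUM CDD_SUM", sorted by year.
annual_degree_days() {
  local hdd_base=${1:?usage: annual_degree_days HDD_BASE CDD_BASE}
  local cdd_base=${2:?usage: annual_degree_days HDD_BASE CDD_BASE}
  awk -v hb="$hdd_base" -v cb="$cdd_base" '
    BEGIN { hb += 0; cb += 0 }
    NF >= 2 {
      year = substr($1, 1, 4)
      t = $2 - 273.15              # Kelvin -> Celsius
      seen[year] = 1               # report years without any HDD/CDD as 0
      if (t < hb) hdd[year] += hb - t
      if (t > cb) cdd[year] += t - cb
    }
    END {
      for (y in seen) printf "%s %.2f %.2f\n", y, hdd[y] + 0, cdd[y] + 0
    }' | sort
}
```

For example, `annual_degree_days 15 21 < cell_series.txt` (hypothetical file name) prints one line per year with the year, the HDD sum, and the CDD sum, which can then be compared with the values CDO writes for the same cell.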